Do you need to build your own MCP server?

I feel most products and businesses should start building their own MCP servers and exploring the right use cases. This blog tries to ignite that spark.

In my previous blog, I tried to describe MCP without using words like “Protocol” or “Standard”. This blog is a complete flip from that: it is intended for those who want to build their own MCP servers.

As we established, the Model Context Protocol (MCP) is a standardized protocol that connects AI to external systems through a client-server connection that starts with a handshake.

Why should you build your MCP server?

An MCP server is your door to integrating AI directly with your systems and solving real-world problems. It could be for operational efficiency, customer experience, system integration, and a lot more.

GitHub created one to streamline developer tasks, enabling AI to automate pull requests, issue tracking, and repo analysis, boosting productivity across their platform.

Figma built theirs to let MCP clients like Cursor analyze live design files, automating design-to-code workflows and documentation for developers.

If MCP servers existed for these today:

A BookMyShow MCP server could let users book AI-recommended movies right from Claude Desktop.

A CRED MCP server could bring me a magic analysis of my credit card offers, statements, and expenses right inside an LLM with advanced reasoning.

A Razorpay MCP server could let developers integrate the Razorpay SDK from Cursor without hassle.

A Zerodha MCP server would bring all my investment data into an LLM, which I could use for a lot more than simple analysis.

A Zomato MCP server would mean I go to Claude, use the LLM’s abilities to find the best-suited meal for me as per the health plan recommended by a Healthify MCP server, and order it right from there.

Oh, by the way, if these MCP servers existed, as a user I would go to Claude, order groceries on Zepto, use a PhonePe MCP server to complete the payment, and use a Splitwise MCP server to add a new split transaction with my flatmates.

It is worth knowing that MCP server use cases are not always customer-facing.

A Zepto/Blinkit MCP server could expose warehouse APIs for AI to predict stock shortages, syncing supplies to avoid delivery delays.

Flipkart’s internal ‘Customer support MCP server’ could 10x their support team’s efficiency.

A BigBasket MCP server could connect inventory APIs to AI for real-time spoilage predictions, minimizing waste across warehouses.

A PharmEasy MCP server could link prescription APIs to AI for automated compliance checks, cutting audit times.

Your reason to build an MCP server hinges on what your business demands: efficiency, integration, or innovation. To me, MCP servers are becoming like APIs themselves: optional today, foundational tomorrow. Businesses didn’t need websites before the internet took off, but today we register the domain first, then the business ;)

I Want an MCP Server, But What the Heck is an MCP Server?

So, you’re sold on building an MCP server - great call!

If you are not from tech, grab a reading partner from your human engineering team to make the most of this section.

Let’s clear the fog: what is the Model Context Protocol?

This time, we won’t oversimplify by avoiding words like “Protocol”, as I did in my previous blog.

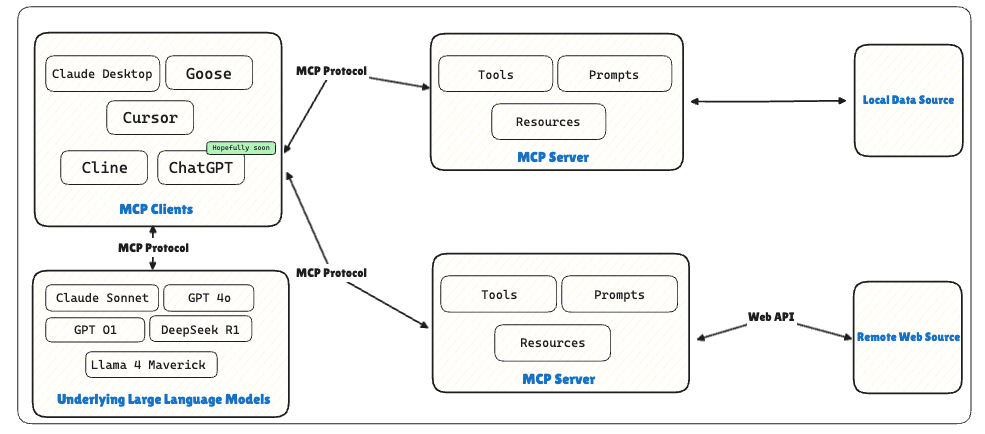

MCP is a standardized protocol that lets large language models like GPT-4 (through an MCP client) tap into your APIs without the headaches of scattered, non-standard integrations. An MCP server is the backend piece you build: typically a thin layer that exposes your APIs to AI through JSON-RPC 2.0 messages. It runs over stdio for local integrations or over HTTP (with Server-Sent Events for streaming) for remote ones.

The Core Pieces - How It’s Wired

MCP splits into three parts, like a circuit board:

Host: Your AI app (e.g., Claude Desktop) with an MCP client inside. This is the brain sending commands.

Server: A separate process (e.g., a GitHub MCP Server) exposing what the AI can use.

Link: JSON-RPC 2.0 messages tie them together, carried over stdio for local runs or over HTTP (with Server-Sent Events for streaming) for remote servers.

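It starts with a handshake: the client sends “initialize” with its protocol version and capabilities, the server replies with its own capabilities (e.g., “I can read files, run tools”), and the client confirms with an “initialized” notification.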
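To make the handshake concrete, here is a minimal sketch of the three messages as Python dicts. The message shapes follow the MCP spec’s lifecycle section; the protocol version string "2025-03-26" is one published spec revision, and the client/server names and helper functions are hypothetical placeholders.

```python
import json

# One published MCP spec revision; negotiate whichever version your SDK supports.
PROTOCOL_VERSION = "2025-03-26"

def initialize_request(request_id: int) -> dict:
    """Step 1: the client announces its protocol version and capabilities."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},  # e.g. {"sampling": {}} if the client supports it
            "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
        },
    }

def initialize_response(request: dict) -> dict:
    """Step 2: the server answers with what it can do (its capabilities)."""
    return {
        "jsonrpc": "2.0",
        "id": request["id"],  # a response always echoes the request id
        "result": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}, "resources": {}, "prompts": {}},
            "serverInfo": {"name": "example-server", "version": "0.1.0"},  # hypothetical
        },
    }

def initialized_notification() -> dict:
    """Step 3: the client confirms; notifications carry no id and get no reply."""
    return {"jsonrpc": "2.0", "method": "notifications/initialized"}

if __name__ == "__main__":
    req = initialize_request(1)
    for msg in (req, initialize_response(req), initialized_notification()):
        print(json.dumps(msg))
```

Only after step 3 should either side start sending regular requests such as tool calls.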

The Functions - What It Does

MCP’s power lies in its three pillars.

Tools: The client says, “Create a GitHub issue,” passing {"repo": "myproj", "title": "Bug"}. The server hits the API and returns the new issue’s ID. Each tool call is a request that waits for its response, so handle timeouts smartly.

Resources: The client asks, “Give me open issues,” by reading a resource URI that can encode a filter. The MCP server fetches the data from the API and sends back JSON.

Prompts: The MCP server offers reusable templates (e.g., “summarize in bullets”) that the client can list and fetch to guide the AI.
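For the Tools pillar, here is a minimal sketch of the server side of the “Create a GitHub issue” example: a registry that answers `tools/list` and a `tools/call` handler that puts a timeout around the backend call. The result shapes (`inputSchema`, `content`, `isError`) follow the MCP spec; `create_issue`, `TOOLS`, `list_tools`, and `call_tool` are hypothetical names, and the “API” is a stub. Real SDKs such as the MCP Python SDK generate most of this for you.

```python
import concurrent.futures
import itertools
import json

_issue_ids = itertools.count(101)

def create_issue(repo: str, title: str) -> dict:
    """Hypothetical tool: stands in for a real call to an issue tracker API."""
    return {"repo": repo, "title": title, "id": next(_issue_ids)}

# Registry: tool name -> (callable, description, JSON Schema for its arguments)
TOOLS = {
    "create_issue": (
        create_issue,
        "Create an issue in a repository",
        {
            "type": "object",
            "properties": {"repo": {"type": "string"}, "title": {"type": "string"}},
            "required": ["repo", "title"],
        },
    ),
}

_executor = concurrent.futures.ThreadPoolExecutor(max_workers=4)

def list_tools() -> dict:
    """Result body for tools/list: what the client (and the model) may call."""
    return {"tools": [
        {"name": name, "description": desc, "inputSchema": schema}
        for name, (_, desc, schema) in TOOLS.items()
    ]}

def call_tool(params: dict, timeout_s: float = 10.0) -> dict:
    """Result body for tools/call, with a timeout around the backend call."""
    # An unknown name raises KeyError; map it to a JSON-RPC error in your dispatcher.
    fn, _, _ = TOOLS[params["name"]]
    future = _executor.submit(fn, **params.get("arguments", {}))
    try:
        value = future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        # The worker thread keeps running; cancel at the backend if it supports it.
        # Tool failures go inside the result so the model can see and react to them.
        return {"content": [{"type": "text",
                             "text": f"{params['name']} timed out after {timeout_s}s"}],
                "isError": True}
    return {"content": [{"type": "text", "text": json.dumps(value)}], "isError": False}
```

Usage: `call_tool({"name": "create_issue", "arguments": {"repo": "myproj", "title": "Bug"}})` returns a text content item holding the new issue as JSON. Reporting the timeout as `isError: true` rather than a protocol error lets the model decide whether to retry.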

A Few Things on Security

For remote (HTTP) servers, MCP’s authorization spec builds on OAuth 2.1. The MCP client kicks off a user consent flow, obtains tokens, and keeps them fresh. MCP servers should enforce tight scopes, like “read:inventory” or “write:claims,” so the AI can’t overstep.
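Scope enforcement on the server side can be as simple as a guard in front of each handler. The sketch below assumes your auth layer has already validated the OAuth token (signature, audience, expiry) and handed you its scopes; `AccessToken`, `requires_scope`, and both handlers are hypothetical, and the data they return is placeholder.

```python
from dataclasses import dataclass, field
from functools import wraps

@dataclass
class AccessToken:
    """Hypothetical: the subject and scopes taken from an already-validated token."""
    subject: str
    scopes: set = field(default_factory=set)

class InsufficientScope(Exception):
    """Raised when a token lacks the scope a handler needs."""

def requires_scope(scope: str):
    """Refuse to run the wrapped handler unless the token carries `scope`."""
    def decorator(handler):
        @wraps(handler)
        def wrapper(token: AccessToken, *args, **kwargs):
            if scope not in token.scopes:
                raise InsufficientScope(f"{token.subject} lacks scope {scope!r}")
            return handler(token, *args, **kwargs)
        return wrapper
    return decorator

@requires_scope("read:inventory")
def read_inventory(token: AccessToken, sku: str) -> dict:
    return {"sku": sku, "on_hand": 42}  # placeholder data

@requires_scope("write:claims")
def file_claim(token: AccessToken, order_id: str) -> dict:
    return {"order_id": order_id, "status": "filed"}  # placeholder data
```

A token granted only `read:inventory` can read stock but gets `InsufficientScope` when the AI tries to file a claim, which your server would turn into an error response.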

The Edge - Why It Beats the Old Way

Ever lost sleep over a leaky API tool in your agentic system? I have.

MCP flips that. It’s direct: the client talks to the server, with no middleman to leak through. I built a database MCP server in hours using Anthropic’s Python SDK.

Because MCP supports server-to-client notifications (streamed as Server-Sent Events over HTTP), a server can push live updates, for example when a resource changes, to keep the AI’s information up to date.
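Here is a minimal sketch of one such live update: the spec’s `notifications/resources/updated` message (sent to clients that subscribed to a resource), framed as a Server-Sent Events `data:` event. The URI `inventory://w7/stock` and both helper names are hypothetical, and a real HTTP transport may add SSE fields such as `id:` for resumption.

```python
import json

def resource_updated(uri: str) -> dict:
    """JSON-RPC notification telling subscribed clients that a resource changed."""
    # Notifications have a method and params but no "id": no reply is expected.
    return {"jsonrpc": "2.0", "method": "notifications/resources/updated",
            "params": {"uri": uri}}

def to_sse_event(message: dict) -> str:
    """Frame one JSON-RPC message as a single Server-Sent Events 'data:' event."""
    # json.dumps emits no raw newlines by default, so one data line suffices;
    # a blank line terminates the event.
    return f"data: {json.dumps(message)}\n\n"

if __name__ == "__main__":
    print(to_sse_event(resource_updated("inventory://w7/stock")), end="")
```

On receiving it, the client typically re-reads the resource to fetch the fresh data.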

MCP as seen by an Engineer -

Below is a straight-to-the-point, engineer-centric rundown of what the Model Context Protocol (MCP) does and why you’d care.

1. The Quick Why

Standardization: MCP is your one-stop spec for hooking AI into your backend - no more spaghetti of custom integrations around agentic frameworks.

Ecosystem: It’s basically what the “Language Server Protocol” did for code editors, applied to exposing data and actions to LLMs: build a server once, and any compatible client can use it.

2. Building Blocks

Server: A thin wrapper over your API that offers data (resources), does stuff (tools), and shares prompt templates.

Client: The AI agent or LLM app that consumes your server’s goodies.

Connection: Typically a stdio session (local) or an HTTP connection with Server-Sent Events (remote). Both sides speak JSON-RPC 2.0.
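For the stdio case, the MCP spec frames each JSON-RPC message as one line on stdin/stdout. Below is a minimal sketch of that read-dispatch-reply loop under that assumption; `serve` and the `handlers` mapping are hypothetical, and `ping` is used because it is a spec method that returns an empty result. Error codes -32700 and -32601 are the standard JSON-RPC 2.0 “Parse error” and “Method not found”.

```python
import io
import json
import sys

def _send(outstream, message: dict) -> None:
    # json.dumps emits no raw newlines by default, so each message stays on one line.
    outstream.write(json.dumps(message) + "\n")
    outstream.flush()

def serve(handlers: dict, instream=sys.stdin, outstream=sys.stdout) -> None:
    """Read one JSON-RPC message per line and write one response per request.

    Anything else written to stdout corrupts the stream: log to stderr instead.
    """
    for line in instream:
        if not line.strip():
            continue
        try:
            msg = json.loads(line)
        except json.JSONDecodeError:
            _send(outstream, {"jsonrpc": "2.0", "id": None,
                              "error": {"code": -32700, "message": "Parse error"}})
            continue
        if "id" not in msg:
            continue  # a notification: act on it if needed, but never reply
        handler = handlers.get(msg.get("method"))
        if handler is None:
            _send(outstream, {"jsonrpc": "2.0", "id": msg["id"],
                              "error": {"code": -32601, "message": "Method not found"}})
        else:
            _send(outstream, {"jsonrpc": "2.0", "id": msg["id"],
                              "result": handler(msg.get("params", {}))})

if __name__ == "__main__":
    # Drive the loop with in-memory streams instead of a real client process.
    fake_in = io.StringIO('{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n')
    fake_out = io.StringIO()
    serve({"ping": lambda params: {}}, fake_in, fake_out)
    print(fake_out.getvalue(), end="")
```

Passing the streams as parameters keeps the loop testable without spawning a process; in production you would let them default to the real stdin and stdout.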

3. The Handshake

Initialization: Client → “Hey Server, what can you do?”

Capabilities: Server → “I can fetch these resources, run these tools, share these prompts.”

Ready to Rock: Once the client confirms with an “initialized” notification, the AI starts calling your server’s functions, pulling data, or using your prompt templates.

4. The Big Three

Resources: Think “read-only endpoints” with well-defined JSON data (e.g., tickets in a bug tracker).

Tools: Functions the server can perform on behalf of the AI (e.g., create a new issue, run a build).

Prompts: Templated conversation snippets the server can provide (handy for standardizing or guiding AI output).
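Resources and prompts look like this on the server side: `resources/read` returns contents addressed by URI, and `prompts/get` returns a filled-in list of messages. The result shapes follow the MCP spec; the `issues://myproj/open` URI scheme, the `summarize_bullets` prompt name, and the in-memory `ISSUES` list are hypothetical stand-ins for a real bug tracker.

```python
import json

# Hypothetical in-memory stand-in for a bug tracker's API.
ISSUES = [
    {"id": 1, "title": "Crash on login", "state": "open"},
    {"id": 2, "title": "Typo in footer", "state": "closed"},
]

def read_resource(params: dict) -> dict:
    """Result body for resources/read: read-only data addressed by URI."""
    uri = params["uri"]
    if uri != "issues://myproj/open":  # hypothetical URI scheme
        raise KeyError(uri)  # map to a JSON-RPC error in your dispatcher
    open_issues = [i for i in ISSUES if i["state"] == "open"]
    return {"contents": [{"uri": uri, "mimeType": "application/json",
                          "text": json.dumps(open_issues)}]}

def get_prompt(params: dict) -> dict:
    """Result body for prompts/get: a template filled in with the caller's arguments."""
    if params["name"] != "summarize_bullets":  # hypothetical prompt name
        raise KeyError(params["name"])
    text = params.get("arguments", {}).get("text", "")
    return {
        "description": "Summarize text as bullet points",
        "messages": [{
            "role": "user",
            "content": {"type": "text",
                        "text": f"Summarize the following in bullets:\n\n{text}"},
        }],
    }
```

The split mirrors the list above: resources hand the AI data to read, while prompts hand it ready-made instructions; neither performs side effects the way a tool does.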

Writing a basic MCP server is quite simple, and examples are available in Anthropic’s official MCP documentation.

To build for scale, maintainability, and security, here are a few tips:

Some Tips

Progress Notifications: Let the client know how a long-running call is doing.

Cancellation: If the user changes their mind, your server should handle a polite “Nope, never mind.”

Logging: Keep track of calls for debugging or auditing.

Tools can be potent (think of them as arbitrary code execution), so guard them accordingly.

Treat tool descriptions as untrusted unless they come from a server you have verified.

Limit what server-side code can see in the prompt to protect user data.

Configuration: Make your server configurable: some features might be turned on or off per environment.

Consent & Authorization: Build robust flows for user approval before any data or tool usage.

Error Handling & Logging: Return meaningful JSON-RPC errors and log actions for debugging or auditing.
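Progress, cancellation, and error handling fit together in one long-running handler. This sketch follows the spec’s message names (`notifications/progress` keyed by the request’s `params._meta.progressToken`, `notifications/cancelled` carrying a `requestId`) and the standard JSON-RPC “Invalid params” code -32602. The job itself, its `items` parameter, and every function name here are hypothetical.

```python
import threading
from typing import Callable, Optional

INVALID_PARAMS = -32602  # standard JSON-RPC 2.0 "Invalid params" code

def progress_notification(token, progress: int, total: int) -> dict:
    """Tell the client how far along a long call is (no id: it is a notification)."""
    return {"jsonrpc": "2.0", "method": "notifications/progress",
            "params": {"progressToken": token, "progress": progress, "total": total}}

def error_response(request_id, code: int, message: str) -> dict:
    """A meaningful JSON-RPC error instead of a crash or a silent failure."""
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}

def on_cancelled(notification: dict, in_flight: dict) -> None:
    """Handle notifications/cancelled: flag the matching in-flight request."""
    event = in_flight.get(notification["params"]["requestId"])
    if event is not None:
        event.set()

def run_long_job(request: dict, send: Callable[[dict], None],
                 cancelled: threading.Event) -> Optional[dict]:
    """Process items one by one, reporting progress and honoring cancellation."""
    params = request.get("params", {})
    items = params.get("items")
    if not isinstance(items, list):
        return error_response(request.get("id"), INVALID_PARAMS, "'items' must be a list")
    token = params.get("_meta", {}).get("progressToken")
    done = 0
    for _item in items:
        if cancelled.is_set():
            return None  # the spec says not to respond to a cancelled request
        done += 1  # real work on the item would happen here
        if token is not None:  # only report progress if the client asked for it
            send(progress_notification(token, done, len(items)))
    return {"jsonrpc": "2.0", "id": request["id"], "result": {"processed": done}}
```

In a real server, the dispatcher would keep an `in_flight` map of request ids to `threading.Event` objects and run `run_long_job` on a worker thread, so a “Nope, never mind” from the client can flip the event mid-job.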

Need help creating a robust MCP ecosystem for your business or product?

Feel free to contact me at harsh.joshi.pth@gmail.com [or don’t :)]

P.S. This blog focused mostly on MCP servers, but try asking yourself: “Would you rather build an MCP client?”